Archana Arakkal, Practice Lead: Intelligent Data Engineering

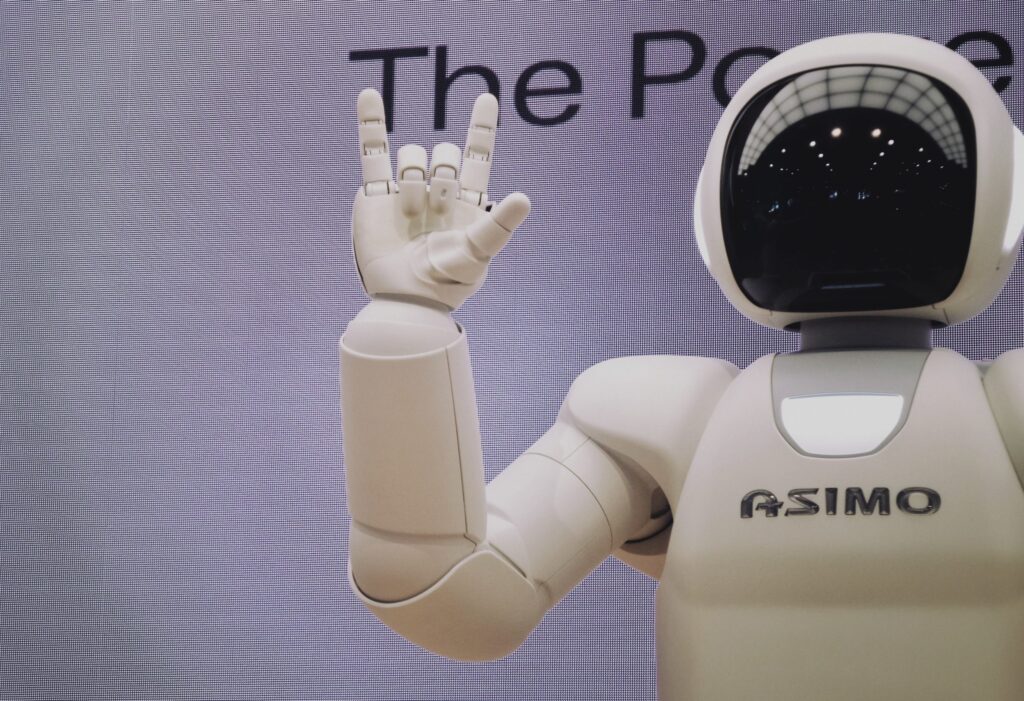

Every day as an ML Engineer, I look for new ways to add value and enhance our lives through AI. There are many problems in search of solutions, and we may think that AI could be those solutions, but very often we hit a glass ceiling where its limitations are glaringly obvious.

As an AI enthusiast, I speak for many when I say that AI is great, but it is never a replacement for human beings. AI is, by its very nature, artificial. Whatever benefits it may offer, we must always ground our thinking in what it means for human beings and the society we live in, and it is even more important to implement these human-centric solutions sooner rather than later.

Our saving grace here is AI ethics and Responsible AI, but what exactly do these mean for us as a society?

Taking it back to first principles, ethics are “the moral principles that govern a person’s behavior or the conducting of an activity”; another definition is “the branch of philosophy that involves systematising, defending, and recommending concepts of right and wrong behavior.”

Rather than debate morality itself, I’d like to look at what determines whether something is right or wrong and, by extension, which parameters determine whether AI is used in a morally sound manner.

Considering that AI attempts to mimic human intelligence (often unsuccessfully), would it not make sense to use frameworks such as the Universal Declaration of Human Rights (UDHR) to govern AI ethics? A recent report titled “Artificial Intelligence and Human Rights: Opportunities and Risks” seems to agree, and it summarises the basic argument as follows: “The UDHR provides us with a (1) guiding framework that is (2) universally agreed upon and that results in (3) legally binding rules—in contradistinction to ‘ethics’, which is (1) a matter of subjective preference (‘moral compass,’ if you will), (2) widely disputed, and (3) only as strong as the goodwill that supports it. Therefore, while appealing to ethics to solve normative questions in AI gets us into a tunnel of unending discussion, human rights are the light that we need to follow to get out on the ‘right’ side. Or so the argument goes.”

As a practitioner in the field of AI, I can say that relying purely on the UDHR will not solve the ethical dilemmas we face with AI, because I believe that argument overestimates what the UDHR can do. Ethics are severely underestimated, and we may be more aligned than we think when it comes to a moral compass.

A good example of this conundrum between the UDHR and ethics is the classic case of using an AI system as a diagnostic tool in the healthcare industry.

There is a list of potential positives and negatives to weigh. On the positive side, the accuracy and efficiency of diagnostic AI tools support the rights to life, liberty, security, an adequate standard of living, and education. On the negative side, building these tools requires data, which infringes on the right to privacy. One solution is to develop methods for creating these diagnostic tools without violating privacy, and this may be the obvious alternative that many AI practitioners are pursuing. But what if a situation arises where we cannot fully respect privacy, and the patients who need these diagnostic tools are suffering or dying right now?

The UDHR may not solve that problem, but ethical reasoning such as the Kantian framework may be a start. For example, for de-identified data that could be traced back to individuals only through sophisticated means, a Kantian approach would ask whether there should be a strict rule against using it. Theories of social justice, in turn, would look at the effects of these decisions on different segments of society.

In essence, we have established that it is essential to combine ethical reasoning with human rights in order to build a case-by-case understanding of AI ethics and Responsible AI. However, a loosely composed framework is not reassuring enough to ensure that AI is used ethically.

In an attempt to rectify this lack of structure, several organisations, such as the European Union, have proposed “AI regulation.” However, such frameworks have drawbacks. The proposed bill assesses the level of risk associated with various uses of AI and subjects these technologies to obligations proportional to their potential threats. This encourages companies to adopt AI technologies as long as the operational risk is low, which does not necessarily align with the risk to human rights.

The obvious gap in these regulations is that AI systems are not obliged to respect fundamental freedoms in the way that governments are bound by rights designed to protect against state intrusion. There have been attempts to rectify this through the European Union’s General Data Protection Regulation (GDPR), which allows users to control their personal data and requires companies to respect those rights. However, it does not enable users to mobilise their human rights, and there has been no education on the value of safeguarding their personal information.

The regulations created so far try to strike a balance between the subjective interests of individuals and those of industry. They try to protect human rights while ensuring that the laws adopted do not impede technological progress. But this balancing act often results in merely illusory protection, without offering concrete safeguards for citizens’ fundamental freedoms.

To achieve real protection, the solutions adopted must be adapted to the actual needs and interests of individuals rather than to assumptions about what those might be, and any solution must include citizen participation. A good example of how ethics and human rights can be balanced to address the AI ethics conundrum is the “human rights by design” approach, which is described as follows: “A ‘human rights by design’ approach needs to be capable of addressing human rights issues with short-, medium-, and long-term implications. These might include:

- How do we interpret key human rights concepts, such as informed consent or remedy, in the context of big data analytics, the internet of things, and artificial intelligence?

- How do we involve rights holders in the innovation process for products or services that might have millions or billions of users?

- What are the most effective ways to address the known human rights risks of disruptive technologies, such as the risk that algorithm-based decision-making can result in discriminatory outcomes (such as in the areas of housing, credit, employment, health, and public services)?

- How do we address novel human rights risks that emerge from the use of disruptive technologies, such as land rights and airspace in the age of drones?

- What use cases for disruptive technologies in different industries—such as agriculture, financial services, healthcare, or mining—will present the most salient human rights risks and opportunities?”

With all these variations and attempts at solving the AI ethics dilemma, it has become clear that, as a human race, we must be more conscious both when adopting and when creating AI solutions. We must remember not only the data-centric approach but also the human-centric one, which will both secure a successful future for us and protect those who matter most and who inspired AI in the first place: humans. Leveraging ethical reasoning and human rights through a more inclusive approach is the start of truly making AI ethics and Responsible AI a reality. But the decision to adopt this approach must be made from the outset, and the responsibility lies with all of us, i.e. the solution builders as well as the consumers.

To close, I would like to make an alteration to a Charles Wheelan quote:

“Change is inevitable; but progress depends on what we do with that change and who we include in that change.”